Disruptively testing parts of neural networks and other ML architectures to make them more robust

(Image generated by author using DALL-E). Interesting what AI thinks of its own brain.

Just as a person’s intellect can be stress tested, artificial neural networks can be put through a range of tests to evaluate how robust they are to different kinds of disruption. This is done by running what’s called controlled ablation testing.

Before we get into ablation testing, let’s talk about a technique in “destructive evolution” that many people who study machine learning and artificial intelligence applications will already know: regularization.

Regularization

Regularization is a very well-known example of ablating, that is, selectively destroying or deactivating parts of a neural network while it trains, in order to make it an even more powerful classifier.

Through a process called Dropout, neurons are randomly deactivated in a controlled way during training. This forces the work that was previously handled by the now-silenced neurons to be taken up by the neurons that remain active.
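As a rough illustration of what Dropout does during a training step, the sketch below applies a random mask to a layer's activations using NumPy. The function name `dropout_forward` and the toy activations are assumptions made for this example. The rescaling by 1 / (1 - rate) follows the "inverted dropout" convention, which keeps the expected activation unchanged.

```python
import numpy as np

def dropout_forward(activations, rate, rng):
    """Zero a random fraction `rate` of activations and rescale the survivors."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    # keep_mask is True where a unit stays active for this training step
    keep_mask = rng.random(activations.shape) >= rate
    # Inverted dropout: scale the kept units so the expected value is unchanged
    return activations * keep_mask / (1.0 - rate), keep_mask

rng = np.random.default_rng(0)
hidden = np.ones((4, 8))  # toy batch: 4 samples, 8 hidden units
dropped, mask = dropout_forward(hidden, rate=0.5, rng=rng)
print(mask.astype(int))  # 1 = unit kept, 0 = unit dropped
print(dropped)           # kept units are scaled up, dropped units are 0
```

A fresh mask is drawn on every call, so a different subset of neurons is silenced at each step, and no single neuron can be relied on exclusively.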

In nature, the brain can undergo a similar phenomenon thanks to neuroplasticity. If a person suffers brain damage, in some cases nearby neurons and brain structures can reorganize to take over some of the functionality of the dead brain tissue.

Similarly, when someone loses one of their senses, such as vision, their other senses often become stronger to make up for the missing capability.

This is also known as the Compensatory Masquerade.

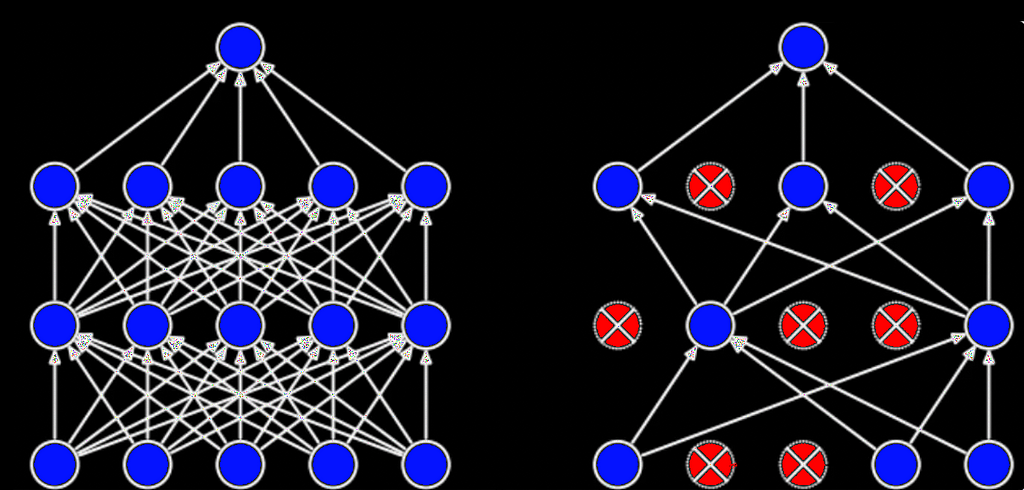

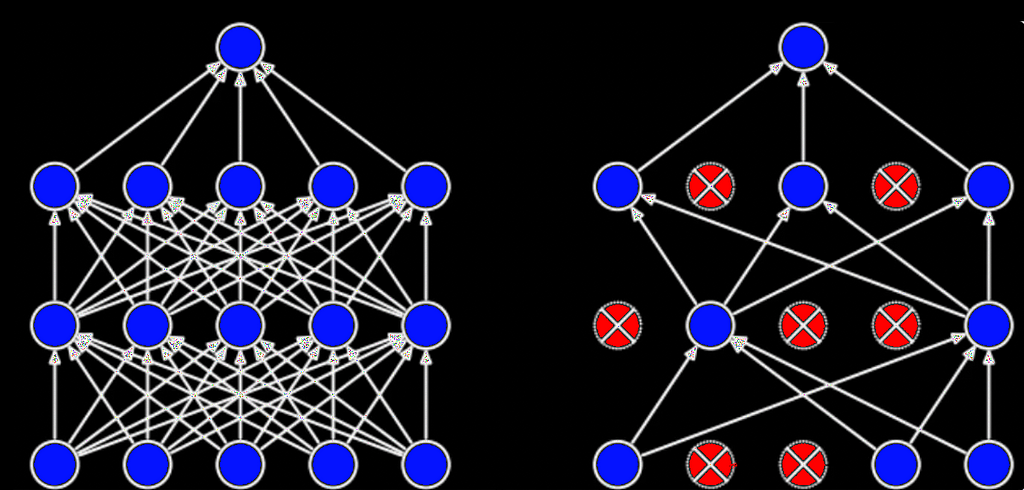

A fully connected neural network on the left, and a randomized dropout version on the right. In many cases, these networks may actually perform comparably well (Image by author)

Ablation Testing

While regularization is used in neural networks and other AI architectures to aid training through artificial “neuroplasticity”, sometimes we simply want to apply a similar procedure to a neural network to see how its accuracy holds up in the presence of deactivations.

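We might do this for several other reasons:

- Identifying critical parts of a neural network: Some parts of a neural network may do more important work than others. To optimize the resource usage and training time of the network, we can selectively ablate the “weaker learners”.

- Reducing the complexity of the neural network: Sometimes neural networks can get quite large, especially in the case of deep MLPs (multi-layer perceptrons). This can make it difficult to map their behavior from input to output. By selectively shutting off parts of the network, we can potentially identify regions of excessive complexity and remove redundancy, simplifying our architecture.

- Fault tolerance: In a real-time system, parts of the system can fail. The same applies to parts of a neural network, and thus to the systems that depend on their output as well. We can turn to ablation studies to determine whether destroying certain parts of the neural network will cause the predictive or generative power of the system to suffer.

There are many different kinds of ablation tests. Here we are going to talk about three specific kinds:

- Neuronal Ablation

- Functional Ablation

- Feature (Input) Ablation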

A quick note: ablation tests will have different effects depending on the network you are testing and the data itself. An ablation test might expose a weakness in one part of the network for a specific data set, while a different ablation test may expose a weakness in another part of the network. That is why a truly robust ablation testing system needs a wide variety of tests to get an accurate picture of the weak points of the ANN (Artificial Neural Network).

Neuronal Ablation

This is the first kind of ablation test we are going to run, and it’s the simplest one to observe and extend. We will simply remove varying percentages of neurons from our neural network.

For our experiment, we have a simple ANN set up to test digit-classification accuracy using our old friend, the MNIST data set.

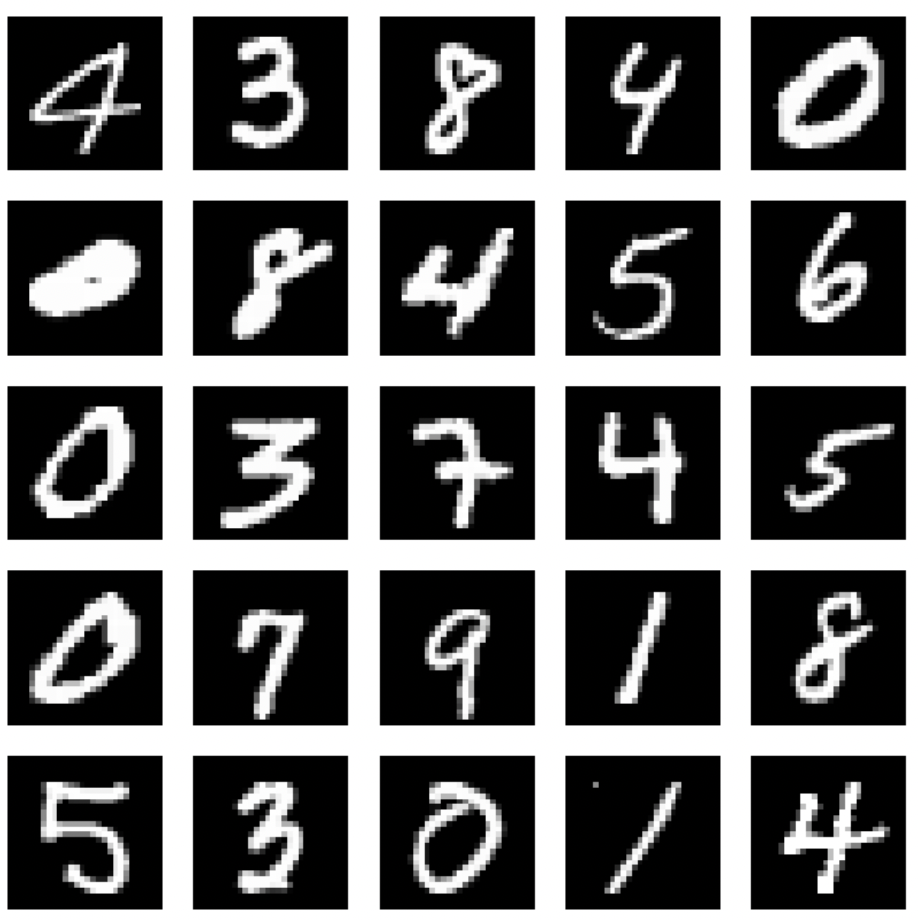

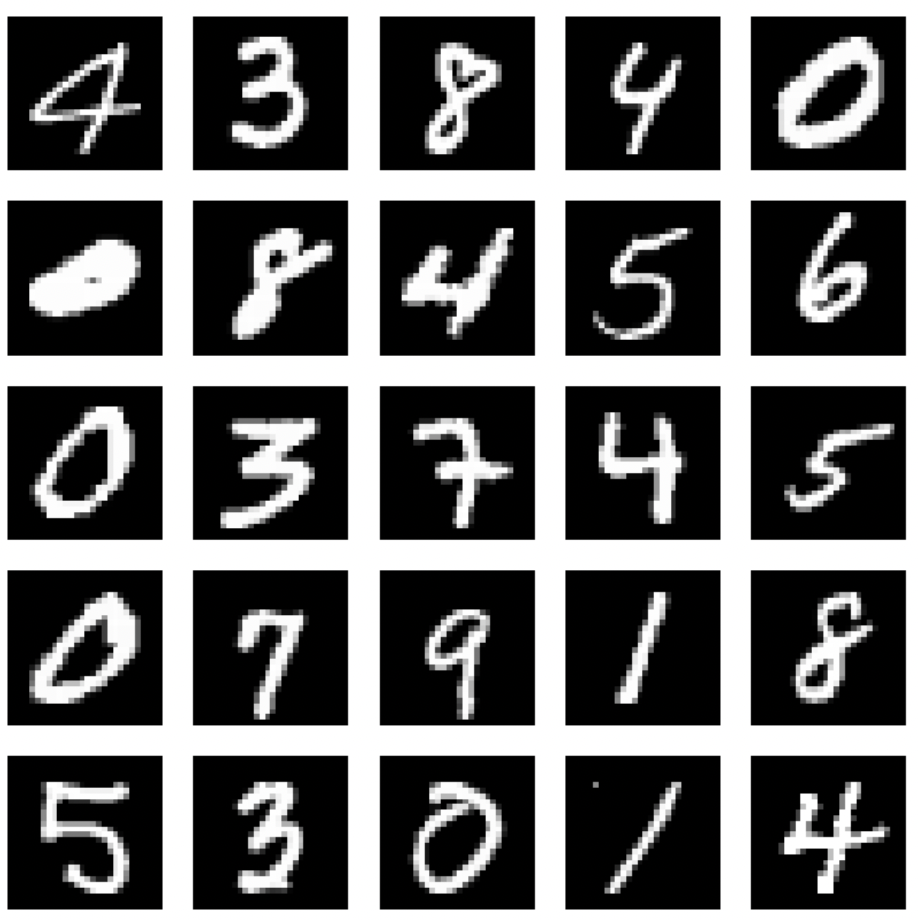

A snapshot of digit data from the MNIST data set (Image by author)

Here is the code I wrote as a simple ANN test harness to test digit classification accuracy.

    import numpy as np
    import matplotlib.pyplot as plt
    import tensorflow as tf
    from tensorflow.keras.datasets import mnist
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense, Dropout, Flatten
    from tensorflow.keras.optimizers import Adam

    # Load MNIST and scale pixel values to [0, 1]
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    x_train, x_test = x_train / 255.0, x_test / 255.0

    # Create the ANN model
    def create_model(dropout_rate=0.0, activation='relu'):
        """Build a one-hidden-layer MLP; `activation` sets the hidden layer's activation."""
        model = Sequential([
            Flatten(input_shape=(28, 28)),
            Dense(128, activation=activation),
            Dropout(dropout_rate),
            Dense(10, activation='softmax')
        ])
        model.compile(optimizer=Adam(),
                      loss='sparse_categorical_crossentropy',
                      metrics=['accuracy'])
        return model

    # Run the ablation study: Dropout percentages of neurons
    # Note: Keras applies Dropout only during training; model.evaluate uses all units
    dropout_rates = [0.0, 0.2, 0.4, 0.6, 0.8]
    accuracies = []

    for rate in dropout_rates:
        model = create_model(dropout_rate=rate)
        model.fit(x_train, y_train, epochs=5, validation_split=0.2, verbose=0)
        loss, accuracy = model.evaluate(x_test, y_test, verbose=0)
        accuracies.append(accuracy)

    # Plot test accuracy against the dropout rate used in training
    plt.plot(dropout_rates, accuracies, marker='o')
    plt.title('Accuracy vs Dropout Rate')
    plt.xlabel('Dropout Rate')
    plt.ylabel('Accuracy')
    plt.grid(True)
    plt.show()

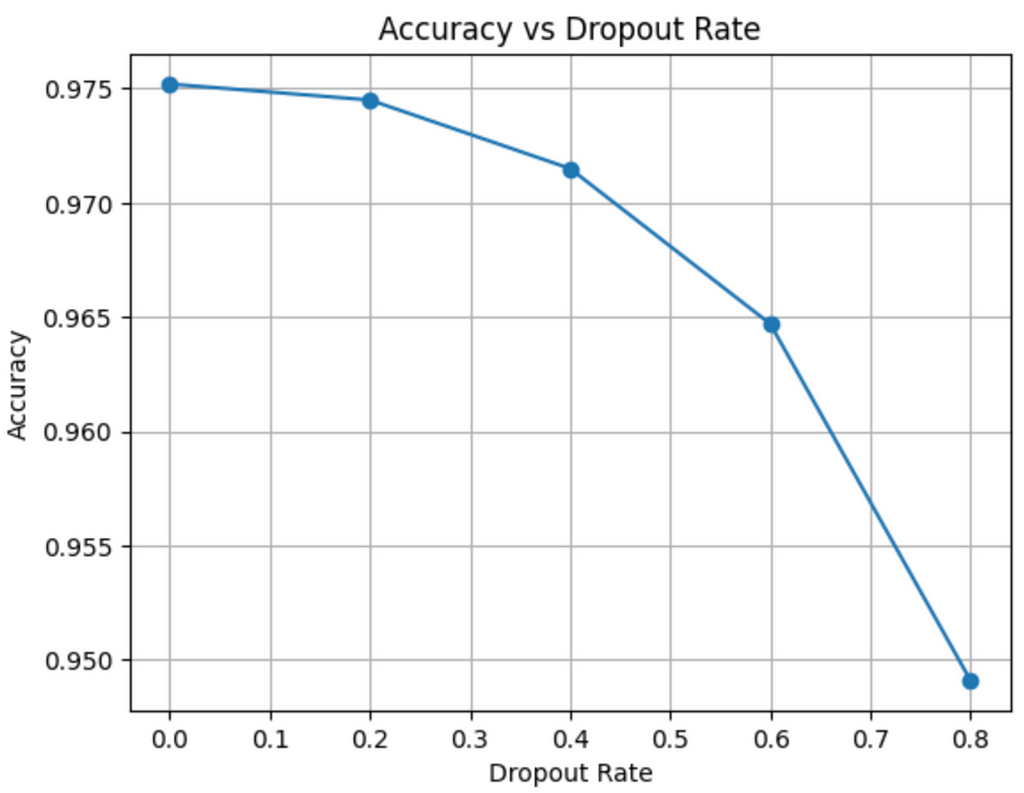

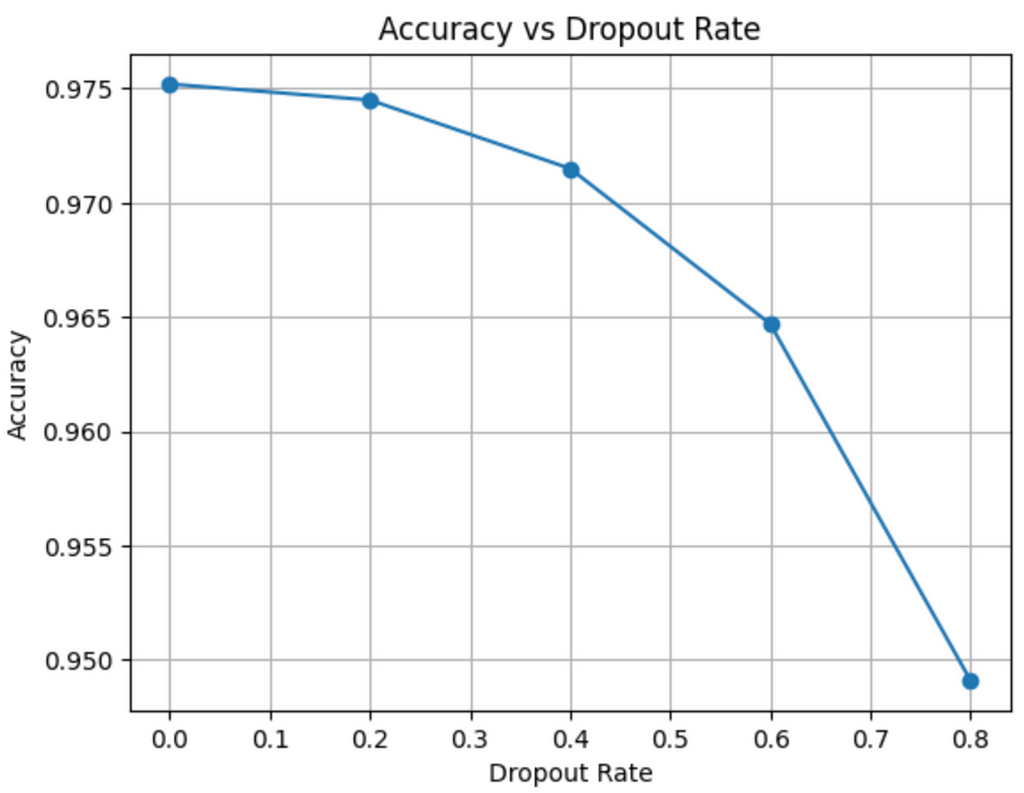

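So if we run the above code, we see the following result of dropping increasing percentages of the neurons in our 128-node MLP during training.

The results are fairly interesting in this simple example: as you can see, a dropout rate of 80% barely affects the accuracy. One caveat is that Keras applies Dropout only during training, so all 128 neurons are active again when the model is evaluated. The result therefore suggests, rather than proves, that the hidden layer has redundant capacity, and that removing excess neurons is an optimization we could consider when building this network.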

Graph generated for dropout ablation test (Image by author)
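Because a Keras `Dropout` layer is inactive at evaluation time, a complementary test is to silence neurons in an already trained network and measure the effect at inference. The sketch below shows the idea with plain NumPy on a toy one-hidden-layer network. The helper names `ablate_hidden_units` and `forward` are hypothetical, and the random weights are only a stand-in for a trained model, so the printed numbers say nothing about MNIST.

```python
import numpy as np

def ablate_hidden_units(w_hidden, b_hidden, fraction, rng):
    """Return copies of a dense layer's weights with a random fraction of units silenced.

    w_hidden has shape (n_inputs, n_units); b_hidden has shape (n_units,).
    """
    n_units = w_hidden.shape[1]
    n_ablate = int(round(fraction * n_units))
    ablated = rng.choice(n_units, size=n_ablate, replace=False)
    w, b = w_hidden.copy(), b_hidden.copy()
    # Zeroing a unit's incoming weights and bias makes its ReLU output exactly 0
    w[:, ablated] = 0.0
    b[ablated] = 0.0
    return w, b, ablated

def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Forward pass of a one-hidden-layer ReLU network; returns class scores."""
    hidden = np.maximum(x @ w_hidden + b_hidden, 0.0)
    return hidden @ w_out + b_out

# Toy stand-in for a trained network (random weights, not a real MNIST model)
rng = np.random.default_rng(0)
w1, b1 = rng.normal(size=(784, 128)), rng.normal(size=128)
w2, b2 = rng.normal(size=(128, 10)), rng.normal(size=10)
x = rng.random((5, 784))

baseline = forward(x, w1, b1, w2, b2).argmax(axis=1)
for fraction in [0.2, 0.4, 0.6, 0.8]:
    w1_a, b1_a, _ = ablate_hidden_units(w1, b1, fraction, rng)
    preds = forward(x, w1_a, b1_a, w2, b2).argmax(axis=1)
    print(f"fraction={fraction}: {np.mean(preds == baseline):.0%} of predictions unchanged")
```

With the Keras model from this article, the same idea could be applied by reading the hidden layer's kernel and bias with `model.layers[1].get_weights()`, ablating them, and writing them back with `set_weights` before calling `model.evaluate`.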

Functional Ablation

For functional ablation, we swap the activation function of the hidden neurons for different curves with different amounts of non-linearity. The last function we use is a straight line, which removes the non-linearity from the hidden layer entirely.

Because non-linear models are more complex than linear models, and the purpose of activation functions is to introduce non-linear effects into the classification, one line of reasoning is:

“If we can get away with using linear functions instead of non-linear functions and still get a good classification, then maybe we can simplify our architecture and lower its cost.”

Note: In addition to regularization, certain kinds of ablation testing, such as functional ablation, have similarities to hyperparameter tuning. They are similar, but ablation testing refers more to removing or replacing parts of the neural network architecture (e.g. neurons, layers, etc.), whereas hyperparameter tuning refers to adjusting the configuration parameters of the model and its training. Both have the goal of optimization.

    # Activation function ablation
    # Assumes create_model accepts an `activation` argument for the hidden layer
    activation_functions = ['relu', 'sigmoid', 'tanh', 'linear']
    activation_ablation_accuracies = []

    for activation in activation_functions:
        # Train a fresh model for each hidden-layer activation and record test accuracy
        model = create_model(activation=activation)
        model.fit(x_train, y_train, epochs=5, validation_split=0.2, verbose=0)
        loss, accuracy = model.evaluate(x_test, y_test, verbose=0)
        activation_ablation_accuracies.append(accuracy)

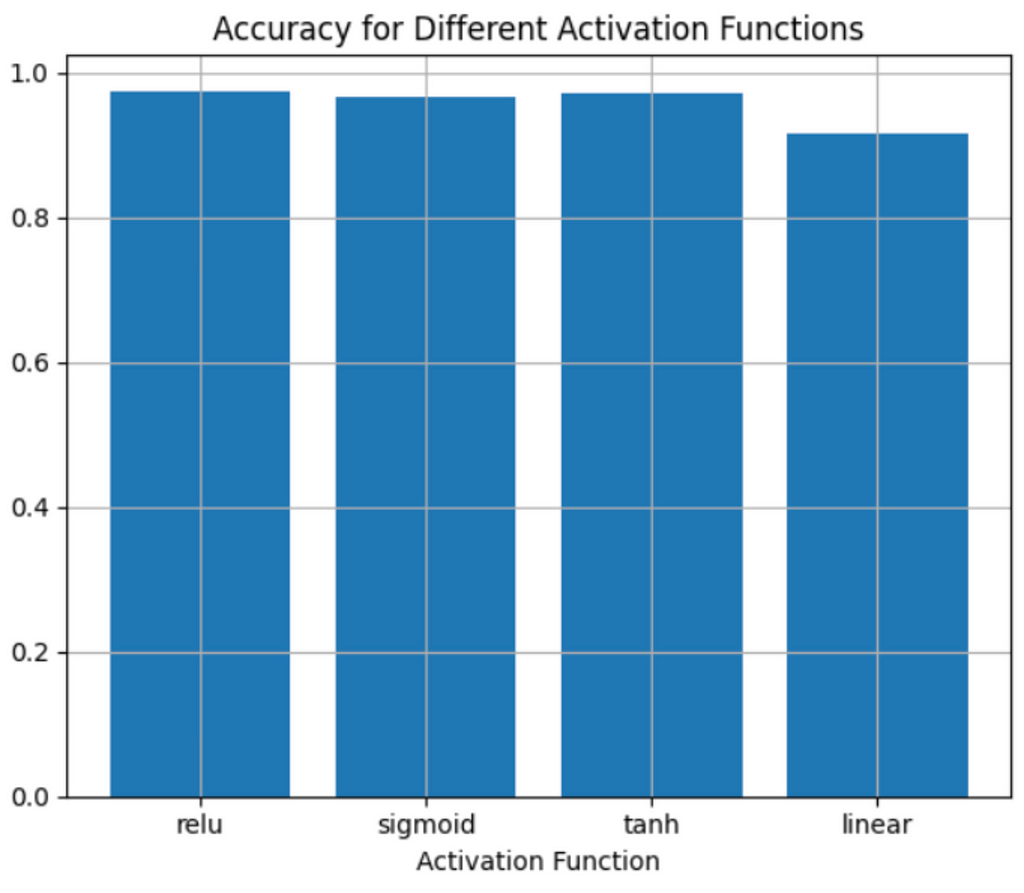

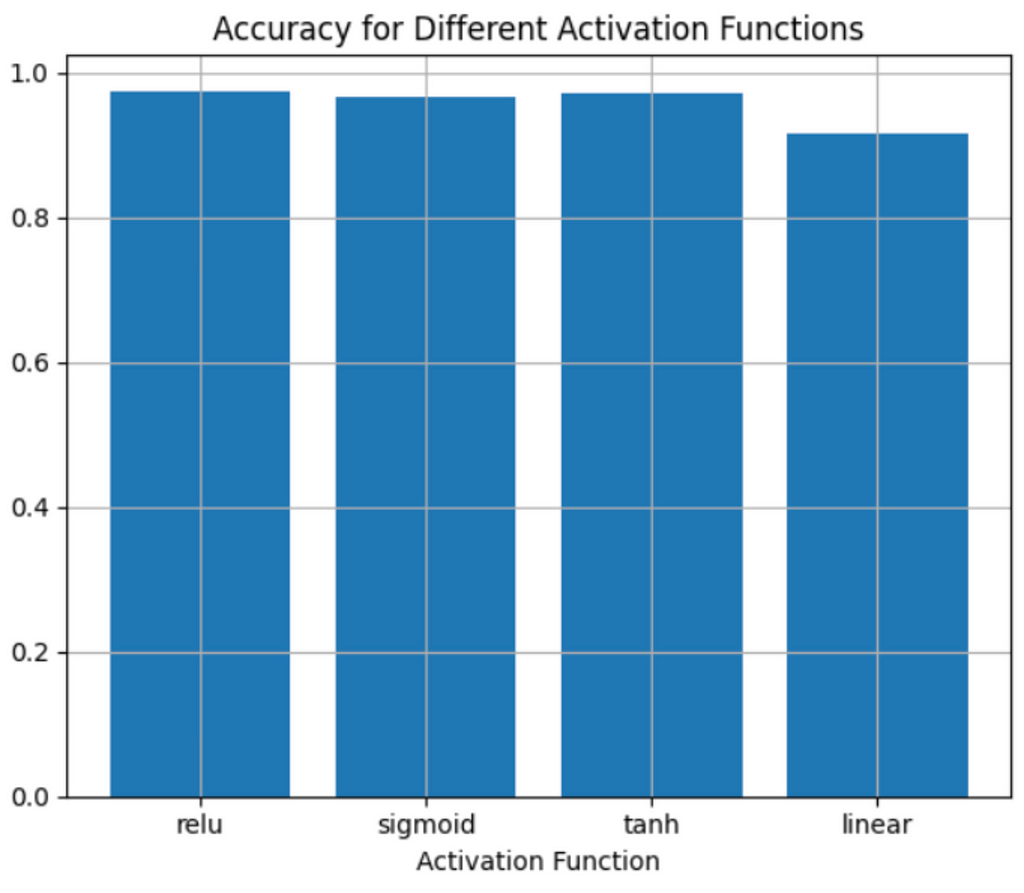

When we run the above code we get the following accuracies vs activation function.

Graph generated for functional ablation test (Image by author)

So it does indeed look like non-linearity of some kind is important to the classification, with ReLU and hyperbolic tangent being the most effective. This makes sense, because digit classification is generally treated as a non-linear task.
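One way to see why the 'linear' variant behaves like a simpler model: with a straight-line activation, the two stacked dense layers compose into a single affine map, so the network is equivalent to softmax regression on the raw pixels. The sketch below checks this numerically with NumPy. The weights are random placeholders chosen for illustration, not weights from the trained model.

```python
import numpy as np

rng = np.random.default_rng(0)
w1, b1 = rng.normal(size=(784, 128)), rng.normal(size=128)  # hidden layer, linear activation
w2, b2 = rng.normal(size=(128, 10)), rng.normal(size=10)    # output layer (pre-softmax)
x = rng.random((5, 784))

# Two layers applied in sequence with no non-linearity in between
two_layer_logits = (x @ w1 + b1) @ w2 + b2

# The same computation folded into one weight matrix and one bias vector
w_single = w1 @ w2
b_single = b1 @ w2 + b2
one_layer_logits = x @ w_single + b_single

# The two results should agree up to floating-point rounding
print(np.allclose(two_layer_logits, one_layer_logits))
```

In other words, the hidden layer adds parameters but no expressive power once its non-linearity is removed, which is consistent with the drop in accuracy for the linear case.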

Feature Ablation

We can also remove features from the classification and see how that affects the accuracy of our predictor.

Before starting a machine learning or data science project, we typically do exploratory data analysis (EDA) and feature selection to determine which features could be important to our classification problem.

But sometimes interesting effects can be observed, especially with the ever-mysterious neural networks, by removing features as part of an ablation study and seeing the effect on classification. Using the following code, we can remove columns of pixels from our digits in groups of 4 columns.

Obviously, there are several other ways to ablate the features, distorting the characters in ways other than by columns. But we can start with this simple example and observe the effects.

    import numpy as np  # needed for np.copy below

    # Input feature ablation
    input_ablation_accuracies = []

    for i in range(0, 28, 4):  # Zero out pixel columns in groups of 4
        x_train_ablated = np.copy(x_train)
        x_test_ablated = np.copy(x_test)

        # Blank columns i..i+3 in every training and test image
        x_train_ablated[:, :, i:min(i+4, 28)] = 0
        x_test_ablated[:, :, i:min(i+4, 28)] = 0

        # Train a fresh model on the ablated data and evaluate it on the ablated test set
        model = create_model()
        model.fit(x_train_ablated, y_train, epochs=5, validation_split=0.2, verbose=0)
        loss, accuracy = model.evaluate(x_test_ablated, y_test, verbose=0)
        input_ablation_accuracies.append(accuracy)

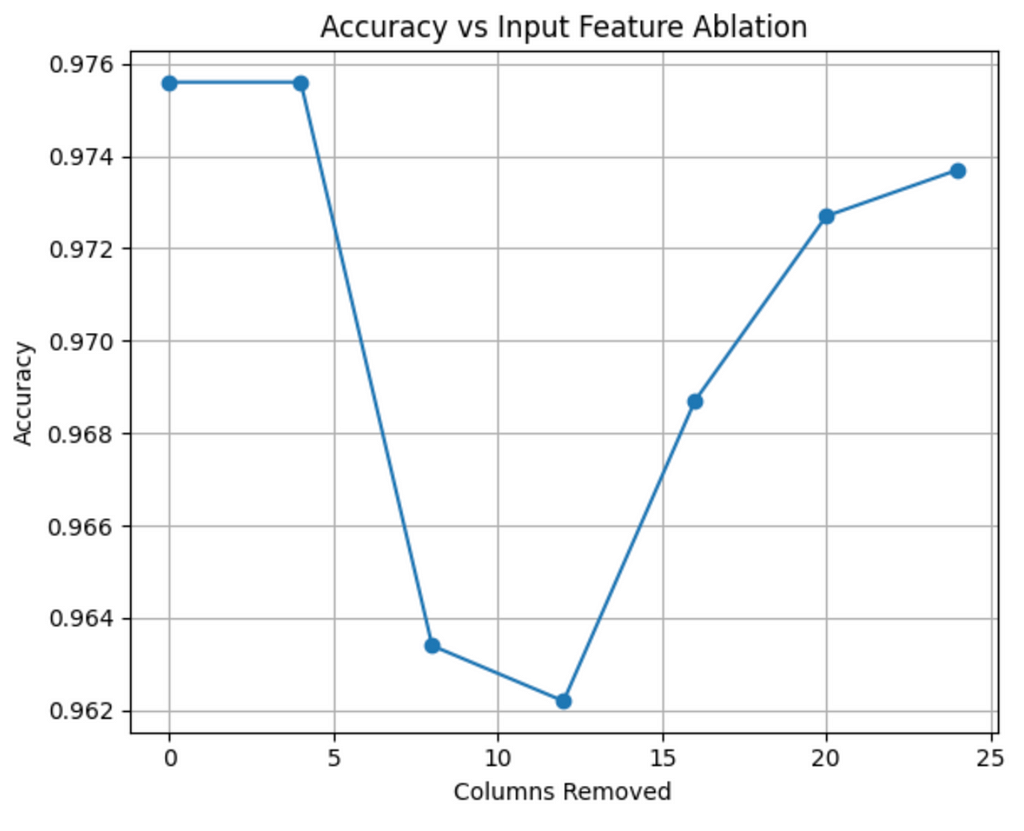

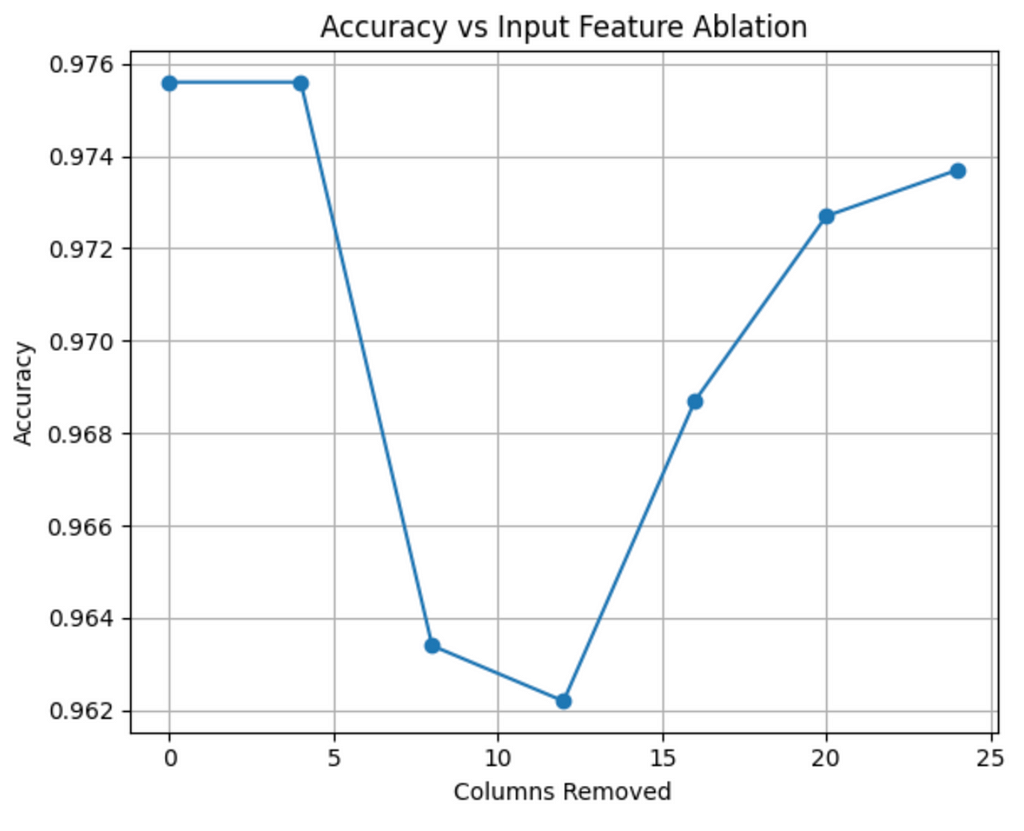

After we run the above feature ablation code, we see:

Graph generated for 4-column input feature ablation test (Image by author)

Interestingly, there’s a slight dip in accuracy when we remove columns 8 to 12, and a rise again after that. This suggests that, on average, the more “sensitive” character geometry lies in those center columns, while the other columns, especially those close to the beginning and end, could potentially be removed as an optimization.

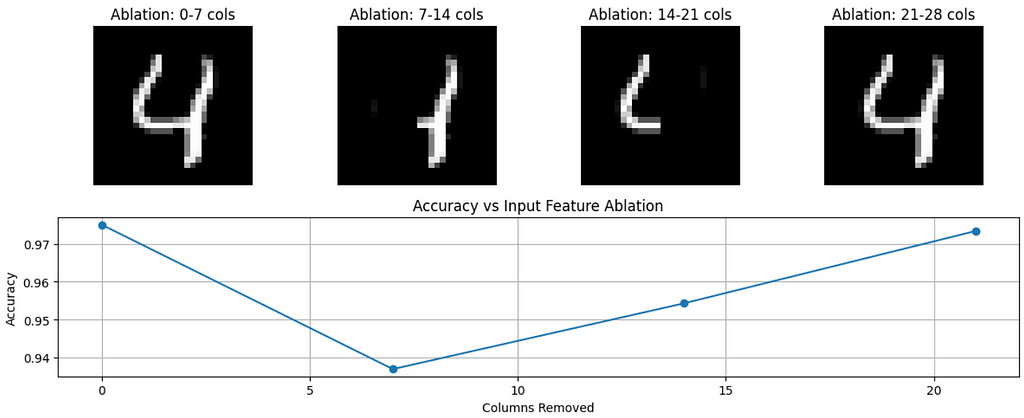

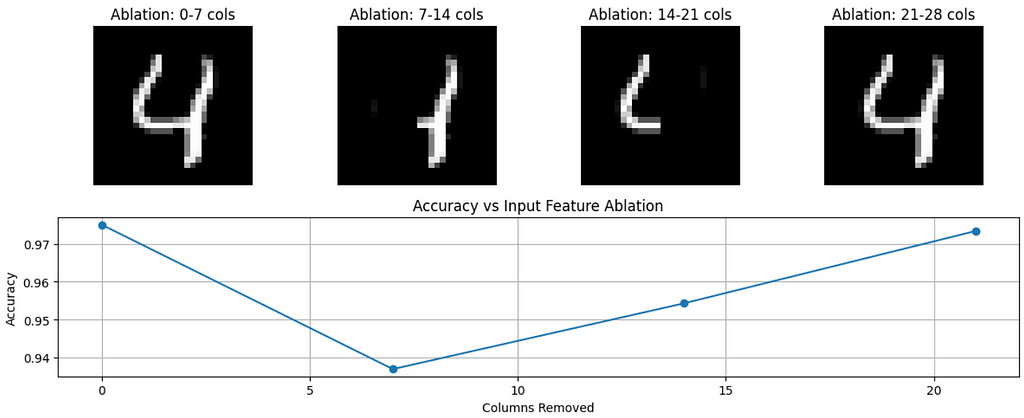

Here’s the same test, this time removing 7 columns at a time, shown along with the ablated digits. Visualizing the actual distorted character data lets us make a lot more sense of the result: the reason that removing the first few columns makes a smaller difference is that they are mostly just padding!

Graph generated for result of 4 column pixel removal (Image by author)

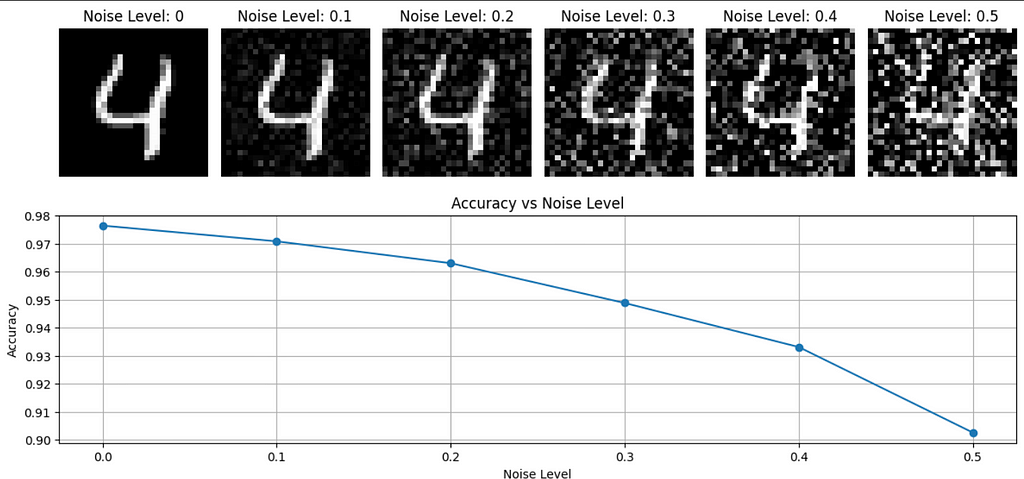

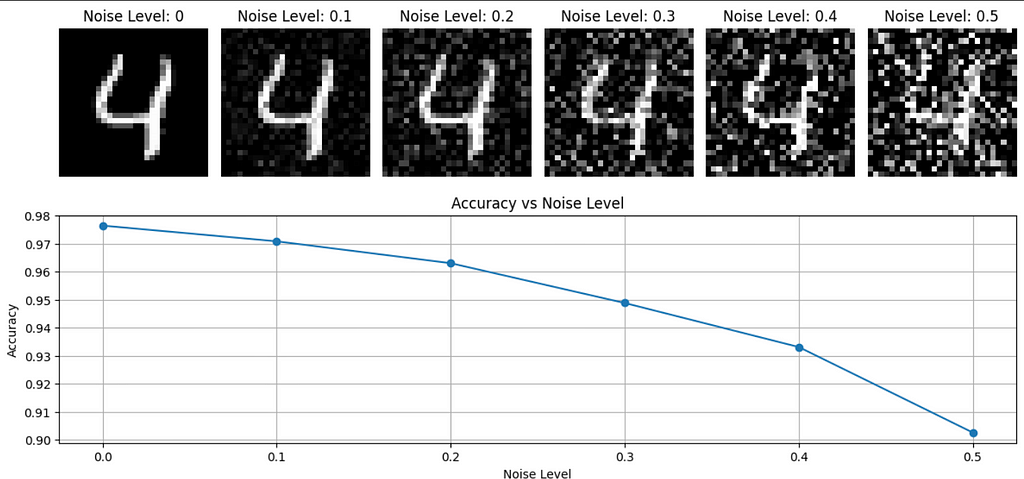
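The padding observation can also be checked directly on the data before training anything. The sketch below computes what share of the total pixel intensity falls in each band of columns. The helper name `column_band_mass` is hypothetical, and the demo uses synthetic images with "ink" only in the centre instead of MNIST so that it runs on its own.

```python
import numpy as np

def column_band_mass(images, band_width):
    """Share of total pixel intensity in each consecutive band of columns.

    images has shape (n_images, height, width); the returned shares sum to 1.
    """
    per_column = images.sum(axis=(0, 1))  # total intensity per pixel column
    starts = range(0, images.shape[2], band_width)
    per_band = np.array([per_column[s:s + band_width].sum() for s in starts])
    return per_band / per_band.sum()

# Synthetic stand-in for digits: blank 28x28 images with "ink" only in the centre
images = np.zeros((10, 28, 28))
images[:, 6:22, 8:20] = 1.0

for start, share in zip(range(0, 28, 4), column_band_mass(images, band_width=4)):
    print(f"columns {start:2d}-{start + 3:2d}: {share:.1%} of total intensity")
```

On the real data, one would pass `x_train` instead of the synthetic array. Bands that hold only a small share of the intensity are the ones whose removal is least likely to hurt accuracy.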

Another interesting example of an ablation study would be testing against different sorts of noise profiles. Below is code I wrote to add progressively more noise to the images, then train and evaluate the above ANN model on them.

    # Ablation study with noise
    noise_levels = [0, 0.1, 0.2, 0.3, 0.4, 0.5]
    noise_ablation_accuracies = []

    plt.figure(figsize=(12, 6))

    for i, noise_level in enumerate(noise_levels):
        # Add zero-mean Gaussian noise scaled by noise_level, then clip back to [0, 1]
        x_train_noisy = x_train + noise_level * np.random.normal(0, 1, x_train.shape)
        x_test_noisy = x_test + noise_level * np.random.normal(0, 1, x_test.shape)
        x_train_noisy = np.clip(x_train_noisy, 0, 1)
        x_test_noisy = np.clip(x_test_noisy, 0, 1)

        # Train and evaluate a fresh model on the noisy data
        model = create_model()
        model.fit(x_train_noisy, y_train, epochs=5, validation_split=0.2, verbose=0)
        loss, accuracy = model.evaluate(x_test_noisy, y_test, verbose=0)
        noise_ablation_accuracies.append(accuracy)

        # Plot one noisy test image per noise level (top row)
        plt.subplot(2, len(noise_levels), i + 1)
        plt.imshow(x_test_noisy[0], cmap='gray')
        plt.axis('off')
        plt.title(f'Noise Level: {noise_level}')

    # Plot accuracy against noise level (bottom row)
    plt.subplot(2, 1, 2)
    plt.plot(noise_levels, noise_ablation_accuracies, marker='o')
    plt.xlabel('Noise Level')
    plt.ylabel('Accuracy')
    plt.grid(True)
    plt.show()

We’ve created an ablation study of the robustness of the network in the presence of Gaussian noise of increasing strength. Notice the expected and marked decrease in prediction accuracy as the noise level increases.

Graph generated for result of progressive noising (Image by author)

Situations like this let us know that we may have to increase the power and complexity of our neural network to compensate. Also remember that ablation studies can be done in combination with each other, for example different types of noise combined with different types of distortion.
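As a minimal sketch of how two of the ablations above might be combined, the helper below adds Gaussian noise and then blanks a band of pixel columns, and a small loop walks over every combination of settings. The name `combine_ablations` and the particular grid values are assumptions for illustration. Training and evaluating a model for each combination is left out to keep the example short.

```python
import itertools
import numpy as np

def combine_ablations(images, noise_level, col_start, col_width, rng):
    """Add clipped Gaussian noise, then zero a band of pixel columns."""
    out = images.astype(float)  # astype returns a copy, so the input is not modified
    out = np.clip(out + noise_level * rng.normal(0.0, 1.0, size=out.shape), 0.0, 1.0)
    # Mask after adding noise so the ablated band stays exactly zero
    out[:, :, col_start:col_start + col_width] = 0.0
    return out

rng = np.random.default_rng(0)
images = rng.random((3, 28, 28))  # toy stand-in for normalised MNIST images

# Every combination of noise level and ablated 7-column band
grid = itertools.product([0.0, 0.2, 0.4], range(0, 28, 7))
for noise_level, col_start in grid:
    ablated = combine_ablations(images, noise_level, col_start, 7, rng)
    print(noise_level, col_start, ablated.shape, round(float(ablated.mean()), 3))
```

Masking after adding the noise is a design choice: doing it the other way round would let noise leak back into the removed columns, which tests something slightly different.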

Conclusions

Ablation studies can be very important for optimizing and testing a neural network. We demonstrated a small example in this post, but there are countless ways to run these studies on different and more complex network architectures. If you have any thoughts, I would love some feedback, and perhaps you could even put them in your own article. Thank you for reading!

Ablation Testing Neural Networks: The Compensatory Masquerade was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
