Creating a Climate GPT Using NASA’s Power API

Image created in ChatGPT

TLDR

In this article, we explore OpenAI’s new GPTs feature, which offers a no-code way to quickly create AI agents that can automatically call external APIs to get data and generate code to answer data analysis questions. In just a few hours, we built a chatbot that answers questions about climate using data from the NASA Power API and also carries out data analysis tasks. The GPT user experience OpenAI has created is excellent, significantly lowering the barrier to creating state-of-the-art AI agents. That said, configuring external API calls can be technically challenging and requires that the API provide an openapi.json file. Also, cost is still unknown, and while GPTs are in preview there appear to be caps on the number of interactions allowed per day. However, with the imminent launch of OpenAI’s GPT store, we may see an explosion of these AI agents, and even now they offer some amazing capabilities.

What Are GPTs?

GPTs were recently launched by OpenAI and offer a way for non-technical users to create AI chat agents powered by the GPT-4 Large Language Model (LLM). Though it’s been possible to do most of what GPTs offer for some time through third-party libraries like LangChain and AutoGen, GPTs offer a native solution. With that comes a slick, easy-to-use interface and tight integration with the OpenAI ecosystem. Importantly, GPTs will soon be available in a new GPT store, raising the possibility of an App Store-style explosion of AI agents. Or not; it’s difficult to tell, but the potential is definitely there.

GPTs come with some very powerful features, notably the ability to browse the web, generate and run code, and, the killer feature, communicate with APIs to get external data. That last feature is especially powerful because it should make it easy to create an AI agent on top of any data store that exposes its data through an API.

Creating a GPT

GPTs are currently available only to ChatGPT Plus subscribers. To create one, visit chat.openai.com/create, which prompts you for some details about what your GPT will do and the thumbnail image you’d like to use (this can be generated automatically using DALL-E 3).

For this analysis, I used the prompt “Create a climate indicators chatbot that uses the NASA Power API to get data”. This created a GPT with the following system prompt (in the ‘Instructions’ field under ‘Configure’) …

The GPT is designed as a NASA Power API Bot, specialized in retrieving and interpreting climate data for various locations. Its primary role is to assist users in accessing and understanding climate-related information, specifically by interfacing with NASA's Power API. It should focus on providing accurate, up-to-date climate data such as temperature, precipitation, solar radiation, and other relevant environmental parameters.

To ensure accuracy and relevancy, the bot should avoid speculating on data outside its provided scope and not offer predictions or interpretations beyond what the API data supports. It should guide users in formulating requests for data and clarify when additional details are needed for a precise query.

In interactions, the bot should be factual and straightforward, emphasizing clarity in presenting data. It should offer guidance on how to interpret the data when necessary but maintain a neutral, informative tone without personalization or humor.

The bot should explicitly ask for clarification if a user's request is vague or lacks specific details needed to fetch the relevant data from the NASA Power API.

This seems very reasonable given the single sentence I provided. It can of course be adjusted to taste and, as we shall see below, is also a good place to guide the chatbot on API calls.

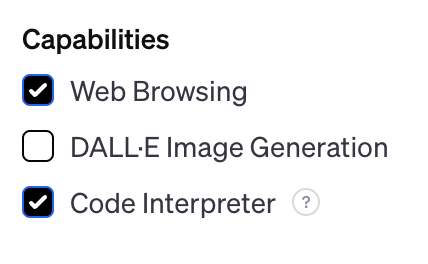

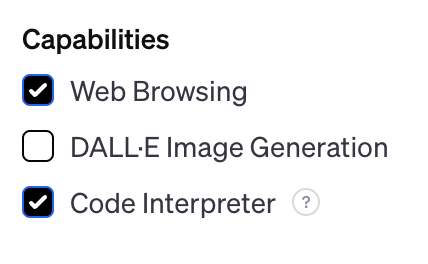

Configuring Capabilities

The GPT can be configured with various capabilities. For our analysis, we deactivate image generation and keep web browsing and code generation and execution with Code Interpreter. For a production GPT, I would probably deactivate web access and ensure all required data is provided by specified APIs, but for this analysis we’ll leave it on because it’s handy for looking up the latitude and longitude of places, which the NASA Power API requires.

Configuring API Access

This is the real heart of data-driven GPTs: configuring the API integration. To do this, click ‘Configure’ at the top of your GPT, scroll down, and click ‘Create Action’ …

Configuring a GPT to communicate with NASA’s Power API for climate data

This opens a section where you can describe your API, either by linking to or pasting an openapi.json API definition (OpenAPI was previously known as Swagger).

This of course means the external API must have an openapi.json file available. Though very common, many important APIs don’t provide one. Also, the default openapi.json often needs a little adjustment for the GPT to work.

The NASA Power API

For this analysis, we will use NASA’s Prediction of Worldwide Energy Resources (POWER) API to get climate indicators. This amazing project combines a wide range of data and model simulations to provide a set of APIs for climate indicators at point locations. There are a few API endpoints; for this analysis we will use the Indicators API, which includes an openapi.json specification that I pasted into the GPT’s action configuration pane. It needed a little manipulation to (i) ensure all parameter descriptions were under a 300-character limit and (ii) add a ‘servers’ section …

"servers": [
  {
    "url": "https://power.larc.nasa.gov"
  }
],
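The two adjustments described above can be scripted rather than done by hand. This is a minimal sketch, assuming the Indicators spec has already been parsed into a Python dict (for example with `json.load`) and treating 300 characters as the description limit the GPT editor enforced at the time of writing; it walks the whole spec, shortens any over-long `description` strings, and adds the `servers` block only if it is missing.

```python
from typing import Any

# Limit observed in the GPT action editor at the time of writing (may change)
MAX_DESCRIPTION_CHARS = 300
NASA_POWER_SERVER = {"url": "https://power.larc.nasa.gov"}


def truncate_descriptions(node: Any, limit: int = MAX_DESCRIPTION_CHARS) -> int:
    """Recursively shorten every string 'description' field; return how many changed."""
    changed = 0
    if isinstance(node, dict):
        for key, value in node.items():
            if key == "description" and isinstance(value, str) and len(value) > limit:
                # Keep the result within the limit, including the trailing ellipsis
                node[key] = value[: limit - 3].rstrip() + "..."
                changed += 1
            else:
                changed += truncate_descriptions(value, limit)
    elif isinstance(node, list):
        for item in node:
            changed += truncate_descriptions(item, limit)
    return changed


def prepare_spec_for_gpt(spec: dict) -> dict:
    """Apply both fixes in place: short descriptions and an explicit 'servers' section."""
    truncate_descriptions(spec)
    if not spec.get("servers"):
        spec["servers"] = [dict(NASA_POWER_SERVER)]
    return spec
```

The returned dict can be written back out with `json.dump(..., indent=2)` and pasted into the action editor. An existing `servers` entry is left untouched so the script is safe to run more than once.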

Once all the errors flagged in the GPT user interface were resolved, the endpoints specified in the openapi.json appeared …

NASA Power API indicators endpoints which the GPT user interface displays for a slightly adjusted openapi.json specification

I would have added other APIs, such as climatology, but OpenAI does not support multiple actions with the same domain, i.e., I couldn’t create a separate action for each openapi.json NASA provides. Instead, I would have had to merge them into one larger openapi.json file. That’s not terribly difficult, but I opted to keep things simple for this analysis and use only the indicators endpoint.
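Merging is mostly a matter of combining the specs’ `paths` and reusable `components`. A minimal sketch, assuming all specs share the same server and that the inputs are the parsed openapi.json dicts for each NASA POWER API; rather than guessing, it raises an error if two specs define the same path or a conflicting component with the same name.

```python
import copy
from typing import List


def merge_openapi_specs(specs: List[dict], title: str) -> dict:
    """Combine several OpenAPI specs into one, failing loudly on name collisions."""
    if not specs:
        raise ValueError("need at least one spec")
    merged = copy.deepcopy(specs[0])  # the first spec supplies 'openapi', 'servers', etc.
    merged["info"] = {**merged.get("info", {}), "title": title}
    merged.setdefault("paths", {})
    merged.setdefault("components", {})

    for spec in specs[1:]:
        for path, item in spec.get("paths", {}).items():
            if path in merged["paths"]:
                raise ValueError(f"duplicate path: {path}")
            merged["paths"][path] = copy.deepcopy(item)
        # 'components' maps sections (schemas, parameters, ...) to named objects
        for section, objects in spec.get("components", {}).items():
            target = merged["components"].setdefault(section, {})
            for name, obj in objects.items():
                # Identical shared definitions are fine; differing ones are not
                if name in target and target[name] != obj:
                    raise ValueError(f"conflicting component: {section}/{name}")
                target[name] = copy.deepcopy(obj)
    return merged
```

This sketch does not check `operationId` values, which the OpenAPI specification requires to be unique across the whole document, so that is worth verifying after merging.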

Adjusting the System Prompt

From some experimentation with the API directly, I found that the ‘user’ field was not provided in every call, which resulted in an API exception. To get around this, I added the following to the system prompt …

ALWAYS set 'user' API query parameter to be '<MY API ID>'

Here, <MY API ID> is an alphanumeric user ID I created for the API calls.

Testing Our GPT

In the GPT edit screen, the left pane is for adjusting the configuration and the right is for previewing. I found that the preview provides some extra debugging information not available in the published GPT, which is especially useful for investigating API issues.

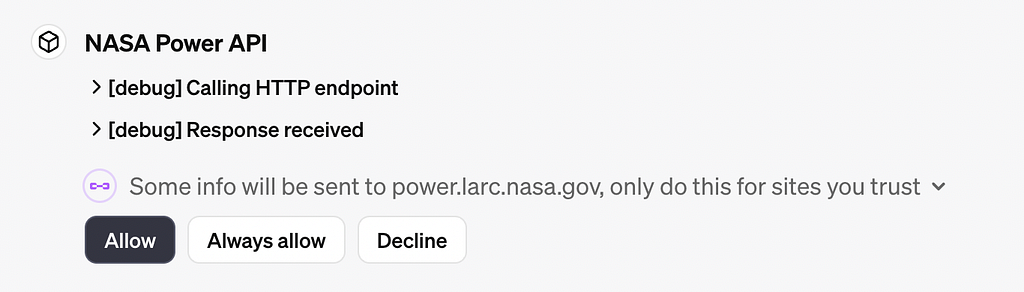

When I asked “What is the mean average rainfall in Tokyo”, I was prompted to confirm use of the API …

On the first use of an API action, the GPT owner is prompted for confirmation

I selected “Always” and the GPT called the API. However, it got a response stating that a year range was required …

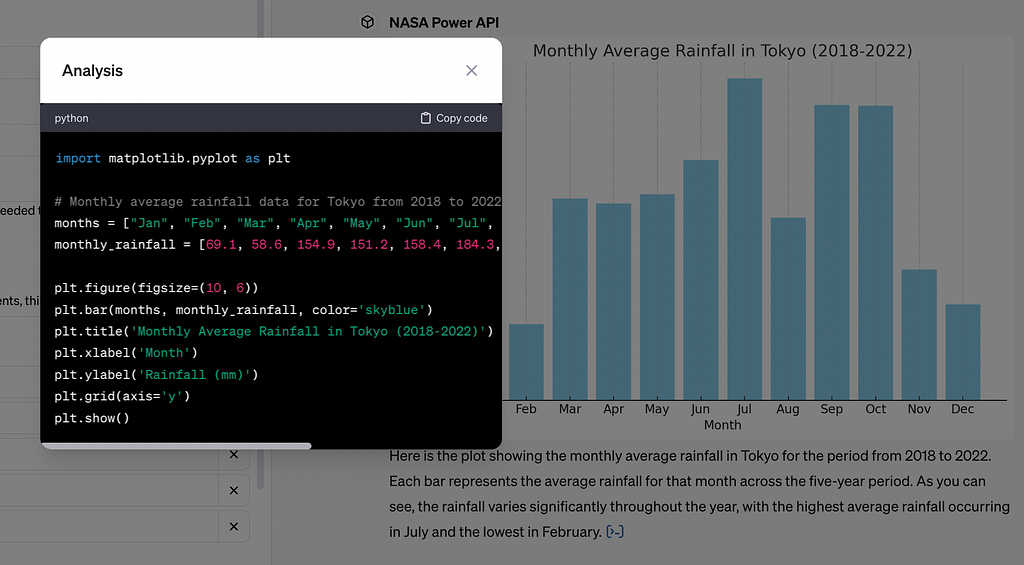

This is rather cool: the GPT already suggests a solution, using the years 2018–2022, which I accept by responding “Yes” …

GPT was able to retrieve and present Tokyo’s average rainfall using NASA’s Power API

To check the result, I used the ‘Try it out’ button on the API page, entering the above year range and Tokyo’s latitude/longitude of 35.6895/139.6917, and got a response. Since I wasn’t familiar with the variable names, I asked the GPT …

The GPT is helpful in presenting API variable names
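To reproduce the ‘Try it out’ check outside the browser, the request URL can be assembled in a few lines. This is a sketch under assumptions: the path `/api/application/indicators/point` and the query parameter names `latitude`, `longitude`, `start` and `end` are assumptions about the Indicators API’s shape, so confirm them against its openapi.json before use. The function always sets `user`, mirroring the system-prompt rule above, and `MY_API_ID` is a placeholder.

```python
from urllib.parse import urlencode

BASE_URL = "https://power.larc.nasa.gov"
# Assumed path for the Indicators point endpoint; check the openapi.json
INDICATORS_PATH = "/api/application/indicators/point"


def build_indicators_url(lat: float, lon: float, start_year: int, end_year: int, user: str) -> str:
    """Build an Indicators API query URL (parameter names are assumptions)."""
    if not (-90 <= lat <= 90 and -180 <= lon <= 180):
        raise ValueError("latitude/longitude out of range")
    if start_year > end_year:
        raise ValueError("start_year must not be after end_year")
    params = {
        "latitude": lat,
        "longitude": lon,
        "start": start_year,  # the API rejected calls without a year range
        "end": end_year,
        "user": user,  # always set, as the system prompt insists
    }
    return f"{BASE_URL}{INDICATORS_PATH}?{urlencode(params)}"


# Tokyo, 2018-2022, with a placeholder user ID
url = build_indicators_url(35.6895, 139.6917, 2018, 2022, "MY_API_ID")
```

The sketch only builds the URL; no request is sent, so the result can be opened in a browser or fetched with any HTTP client.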

Going back to the API response we see that …

"PRECTOTCORR": {
  "1": 69.1,
  "2": 58.6,
  "3": 154.9,
  "4": 151.2,
  "5": 158.4,
  "6": 184.3,
  "7": 247.3,
  "8": 140.4,
  "9": 226.8,
  "10": 226.2,
  "11": 100.3,
  "12": 73.7
},

Hmm, so the underlying API data actually provides values for each of the 12 months, but the GPT response took the first five and presented them as yearly averages.
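Read correctly, the twelve keys are calendar months. The sketch below relabels them and derives a few summary figures from the values shown above. It assumes, as the instruction added to the GPT suggests, that each value is a monthly mean over 2018–2022, so summing them gives an approximate annual figure; the excerpt does not state units, so none are attached.

```python
import calendar

# PRECTOTCORR values from the API response above, keyed by month number
prectotcorr = {
    "1": 69.1, "2": 58.6, "3": 154.9, "4": 151.2, "5": 158.4, "6": 184.3,
    "7": 247.3, "8": 140.4, "9": 226.8, "10": 226.2, "11": 100.3, "12": 73.7,
}

# Relabel month numbers as abbreviated month names ("1" -> "Jan", ...)
monthly = {calendar.month_abbr[int(k)]: v for k, v in prectotcorr.items()}

# Assumption: each value is a mean monthly amount, so their sum approximates a year
annual_total = round(sum(monthly.values()), 1)
wettest = max(monthly, key=monthly.get)
driest = min(monthly, key=monthly.get)

print(f"Annual total (sum of monthly means): {annual_total}")
print(f"Wettest month: {wettest}, driest month: {driest}")
```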

So things looked great at first, but as we often find, the LLM needs some taming to avoid hallucination. Let’s provide a bit more context about the API in the ‘Instructions’ section of the GPT configuration …

The API provides data averaged for the year range specified. If any data is returned with 12 elements, this is likely a list of monthly means.
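The same rule of thumb given to the GPT can be applied when post-processing responses in code. A minimal sketch: it treats a parameter’s values as monthly only when the keys are exactly the strings "1" to "12", as in the PRECTOTCORR excerpt, and flags any other shape for manual inspection instead of guessing. The function names are hypothetical.

```python
def looks_monthly(series: dict) -> bool:
    """True if the keys are exactly the month numbers '1'..'12'."""
    return set(series) == {str(m) for m in range(1, 13)}


def describe_series(name: str, series: dict) -> str:
    """Return a short description that doesn't mislabel months as years."""
    if looks_monthly(series):
        return f"{name}: 12 monthly means for the requested year range"
    return f"{name}: {len(series)} value(s) of unknown granularity; check the API docs"
```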

Trying again “What is the mean average rainfall in Tokyo” …

This time the answer is correct: a good example of improving performance through a little prompting.

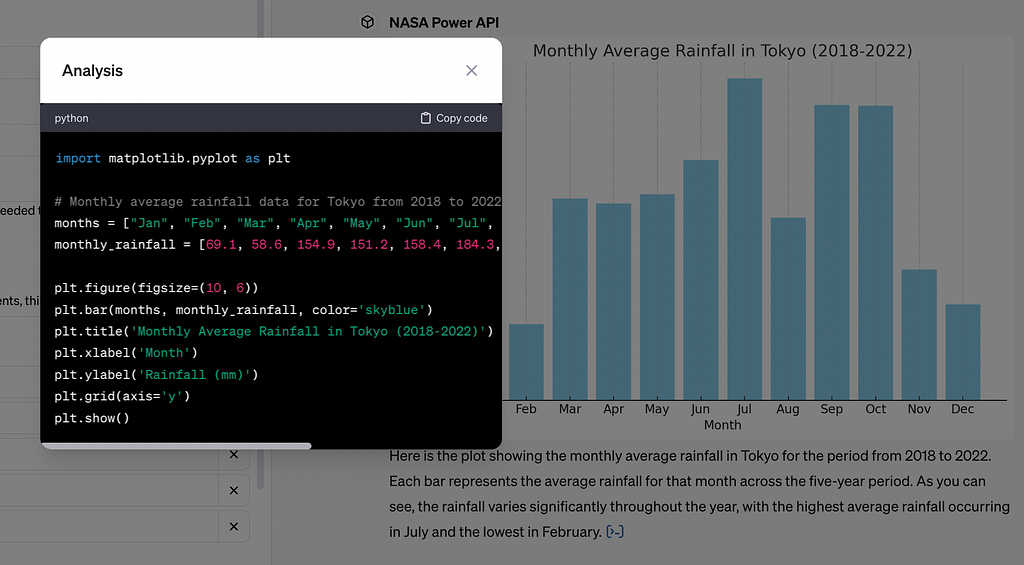

Next, let’s have it do some analysis by asking “Please plot monthly averages” …

GPTs can run code using data they retrieved from APIs to provide basic data analysis

This is very nifty! The values in the above plot align with those retrieved directly from the API. At the end of its response, there is a link where you can review the code it generated and ran …
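For readers who want a similar chart locally, here is a comparable bar plot with matplotlib. It is a sketch, not the code the GPT generated: it reuses the PRECTOTCORR values from the API response above and selects the non-interactive Agg backend so it also runs headless.

```python
import calendar

import matplotlib

matplotlib.use("Agg")  # headless backend; drop this line to display interactively
import matplotlib.pyplot as plt

# Monthly PRECTOTCORR values for Tokyo (2018-2022) from the API response above
values = [69.1, 58.6, 154.9, 151.2, 158.4, 184.3, 247.3, 140.4, 226.8, 226.2, 100.3, 73.7]
months = list(calendar.month_abbr)[1:]  # skip the empty entry at index 0

fig, ax = plt.subplots(figsize=(10, 5))
bars = ax.bar(months, values)
ax.set_title("Tokyo monthly precipitation (PRECTOTCORR), 2018-2022")
ax.set_xlabel("Month")
ax.set_ylabel("PRECTOTCORR")
fig.tight_layout()
```

Call `fig.savefig("tokyo_monthly.png")` (file name arbitrary) to write the chart to disk.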

OK, now let’s try something more involved. First, I asked which variables are available …

GPTs can interrogate documentation API endpoints

It automatically accessed the configuration endpoint to get metadata. Using this, let’s ask “Is relative humidity correlated with solar radiation in Neath, South Wales in the last 5 years” …

It queried the API for data and then generated code to create a plot. Pretty amazing, but it didn’t answer the full question, so let’s insist by asking “Please calculate the correlation coefficient” …

Clicking the link to see the code …

import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt  # needed for the plt.* calls below

# Monthly data for relative humidity and solar radiation in Neath, South Wales from 2018 to 2022
months = ["Jan", "Feb", "Mar", "Apr", "May", "Jun", "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]
relative_humidity = [4.2, 4.3, 4.7, 4.6, 4.0, 4.0, 3.7, 3.4, 3.4, 3.3, 3.1, 3.5]  # Sampled values from MCWBR_DB or similar
solar_radiation = [0.84, 1.65, 2.81, 4.35, 5.36, 5.45, 5.45, 4.34, 3.29, 1.94, 1.03, 0.65]  # Sampled from RADIATION_AVG

# Creating a DataFrame
data = pd.DataFrame({'Month': months, 'Relative Humidity': relative_humidity, 'Solar Radiation': solar_radiation})

# Plotting
plt.figure(figsize=(10, 6))
sns.scatterplot(data=data, x='Relative Humidity', y='Solar Radiation')
plt.title('Correlation between Relative Humidity and Solar Radiation in Neath, South Wales (2018-2022)')
plt.xlabel('Relative Humidity')
plt.ylabel('Solar Radiation')
plt.grid(True)
plt.show()

# Calculating the correlation coefficient between relative humidity and solar radiation
correlation = data['Relative Humidity'].corr(data['Solar Radiation'])
correlation

The code is reasonable.
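As a sanity check on the coefficient, Pearson’s r (the default method of pandas’ `Series.corr`) can be computed directly from the two lists in the generated code, without pandas. One caveat worth flagging: the generated code’s own comment says the humidity values were sampled from “MCWBR_DB or similar”, so it’s worth confirming that the parameter really is relative humidity before trusting the result. With only twelve monthly means, the coefficient also reflects the shared seasonal cycle rather than year-to-year behaviour.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Values copied from the GPT-generated code above
relative_humidity = [4.2, 4.3, 4.7, 4.6, 4.0, 4.0, 3.7, 3.4, 3.4, 3.3, 3.1, 3.5]
solar_radiation = [0.84, 1.65, 2.81, 4.35, 5.36, 5.45, 5.45, 4.34, 3.29, 1.94, 1.03, 0.65]

r = pearson_r(relative_humidity, solar_radiation)
print(f"Pearson r = {r:.3f}")
```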

OK, what about multiple location comparisons? First, I added this to the Instructions (system prompt) just to be clear …

If asked about multiple locations, call the API for each location to get data.

Now, let’s ask “Was Svalbard more humid than Bargoed Wales in the last 5 years?” …

Since the API needs latitude and longitude, the GPT first confirms its approach. If we had configured a geocoding API as an action, this wouldn’t be required, but for now, using central coordinates for each location will suffice.

The GPT called the API for both locations, extracted data, and compared …

I grew up in Bargoed and can honestly say it’s a VERY rainy place. Calling the API directly confirms that the above values are correct.
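The “call the API for each location” instruction boils down to a simple loop. In this sketch, `fetch_mean` is a hypothetical callable that queries the API for a single point and returns the period mean of one parameter (for example relative humidity). The coordinates are approximate town-centre values chosen for illustration, not geocoded results.

```python
from typing import Callable, Dict, Tuple

# Approximate (latitude, longitude) pairs; illustrative, not geocoded
LOCATIONS: Dict[str, Tuple[float, float]] = {
    "Svalbard (Longyearbyen)": (78.22, 15.65),
    "Bargoed, Wales": (51.69, -3.23),
}


def compare_locations(
    locations: Dict[str, Tuple[float, float]],
    fetch_mean: Callable[[float, float], float],
) -> Tuple[str, Dict[str, float]]:
    """Call the API once per location; return (location with highest value, all values)."""
    values = {name: fetch_mean(lat, lon) for name, (lat, lon) in locations.items()}
    highest = max(values, key=values.get)
    return highest, values
```

Passing the fetcher in as a parameter keeps the comparison logic independent of the HTTP details and easy to test with a stub.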

Limitations

During this analysis a few challenges presented themselves.

First, there seems to be a limit on how many GPT-4 interactions are allowed per day. This was reached after an hour or two of testing, which seemed to be lower than published GPT-4 limits, so it might be related to the preview nature of GPTs. This would prevent any production rollout, but one hopes it will be resolved as part of the GPT store launch.

Performance can also be a bit slow at times, but given the GPT was calling an external API and running code, not unreasonably so. The UX is very good, indicating clearly to the user that things are in progress.

Cost is unknown, or at least we couldn’t see any significant impact on costs, but we will continue to track this. GPTs generate code and analyze lengthy API responses, so token costs may well be the blocker for many organizations.

Conclusions and Future Work

In this analysis, we only used the ‘indicators’ NASA Power API endpoints. It wouldn’t be much work to use all of NASA’s Power endpoints and incorporate geocoding to create a really comprehensive climate chatbot.

GPTs offer a low-code way to develop state-of-the-art AI agents that can interface automatically with APIs and generate code to perform data analysis. They are potentially game-changing: we were able to create a fairly advanced climate chatbot in just a few hours without writing a line of code!

They are by no means perfect yet. The configuration UX is very, very good, but in some areas, such as API error reporting, the user is left guessing. External API setup requires technical knowledge, and some APIs lack the required openapi.json, making them harder to integrate. Cost may also be prohibitive, but it’s difficult to say yet as GPTs are in preview. As always with any LLM application, much of the work will be ensuring factual correctness, and the typical design and engineering workflows of any software project still apply.

GPTs are amazing, but aren’t magic … yet.

References

For NASA’s Prediction of Worldwide Energy Resources (POWER): “These data were obtained from the NASA Langley Research Center (LaRC) POWER Project funded through the NASA Earth Science/Applied Science Program.”

Developing a Climate GPT Using NASA’s Power API was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
